IBL News | New York

Fast-scaling, hyped technologies don't always fulfill their promise, especially if they fail to generate significant revenue. What if generative AI turns out to be a dud?

The programmers and undergraduates who use generative tools as coding assistants and for writing text are not deep-pocketed customers.

This is the core argument of a viral article by Gary Marcus, co-founder of the Center for the Advancement of Trustworthy AI, who is well known for his testimony on AI oversight before the US Senate.

“Neither coding nor high-speed, mediocre quality copy-writing is remotely enough to maintain current valuation dreams,” he wrote.

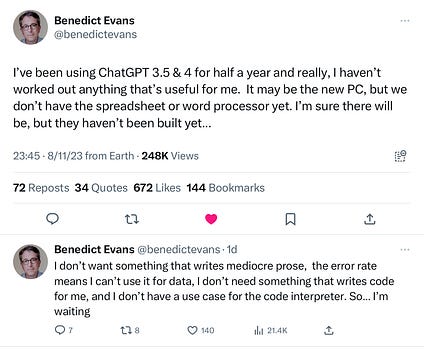

Other voices, such as venture capitalist Benedict Evans, have raised similar concerns in a series of posts.

“My friends who tried to use ChatGPT to answer search queries to help with academic research have faced similar disillusionment,” Gary Marcus stated. “A lawyer who used ChatGPT for legal research was excoriated by a judge, and basically had to promise, in writing, never to do so again in an unsupervised way.”

“In my mind, the fundamental error that almost everyone is making is in believing that Generative AI is tantamount to AGI (general purpose artificial intelligence, as smart and resourceful as humans if not more so).”

His doubts are rooted in an unsolved problem at the core of generative AI: its tendency to confabulate (hallucinate) false information.

As Sam Altman, the CEO of OpenAI [in the picture], recently said, AI researchers still cannot guarantee that any given system will be honest, harmless, and unbiased.

“If hallucinations aren’t fixable, generative AI probably isn’t going to make a trillion dollars a year,” Gary Marcus predicted.
