IBL News | New York

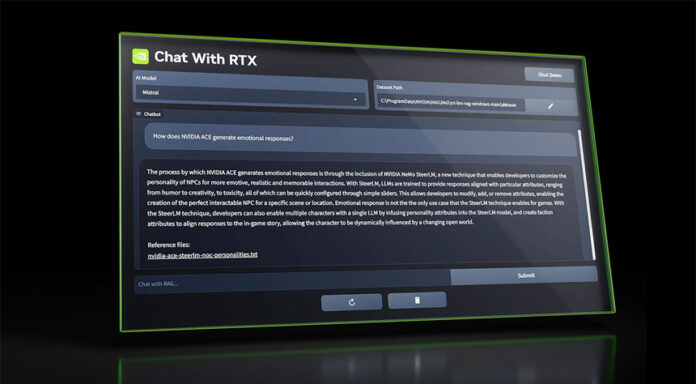

NVIDIA yesterday introduced Chat with RTX, a personalized demo chatbot app that runs locally on RTX-powered Windows PCs, providing fast and secure results.

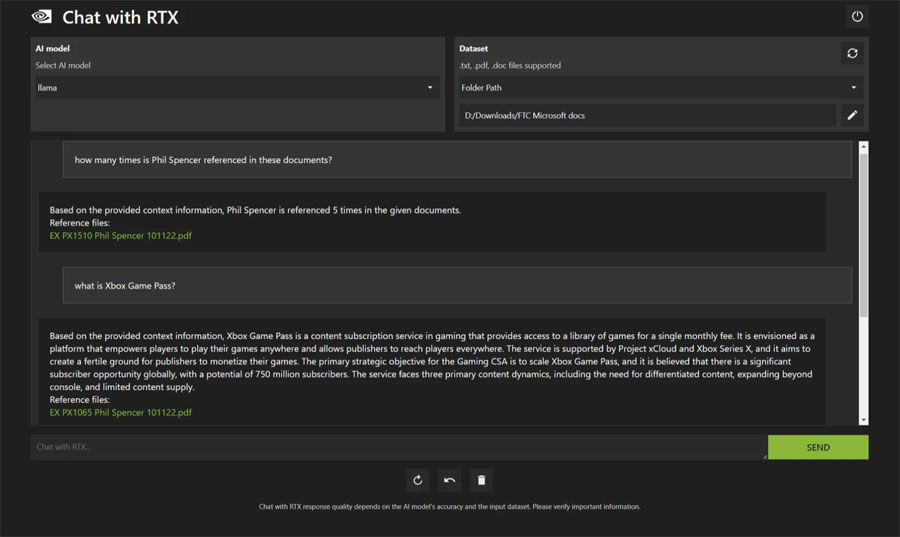

This early version lets users personalize an LLM connected to their own content, such as docs, notes, videos, or other data. It combines retrieval-augmented generation (RAG), TensorRT-LLM, and RTX acceleration so users can query a custom chatbot and quickly get contextually relevant answers.
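To illustrate the retrieval-augmented generation idea, here is a minimal Python sketch of the retrieval step. It assumes plain bag-of-words cosine similarity in place of the neural embeddings and TensorRT-LLM inference a real system would use. The functions `retrieve` and `build_prompt` and the sample documents are hypothetical; this is not NVIDIA's implementation.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; a stand-in for a real embedding model (assumption).
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words term-count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    # Rank the user's documents by similarity to the query and keep the top k names.
    q = Counter(tokenize(query))
    ranked = sorted(
        docs, key=lambda name: cosine(q, Counter(tokenize(docs[name]))), reverse=True
    )
    return ranked[:k]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    # Augment the question with the retrieved passages before it reaches the LLM.
    context = "\n".join(f"[{name}] {docs[name]}" for name in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = {
    "notes.txt": "Meeting notes: the budget review is on Friday.",
    "recipe.txt": "Bake the bread at 220 degrees for 30 minutes.",
    "trip.txt": "Flight to Lisbon departs Monday morning.",
}
print(build_prompt("When is the budget review?", docs, k=1))
```

The model then answers from the retrieved passage rather than from its training data alone, which is what makes the answers specific to the user's own files.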

Available as a 35GB download, NVIDIA’s Chat with RTX requires Windows 11 and either an NVIDIA GeForce RTX 30 or 40 Series GPU or an NVIDIA RTX Ampere or Ada Generation GPU with at least 8GB of VRAM.

The app is tailored to searching local documents and personal files. Users can feed it YouTube videos and their own documents to create summaries and get relevant answers based on their own data, whether by analyzing a collection of documents or scanning through PDFs.

Chat with RTX essentially installs a web server and a Python instance on the PC, which then uses the Mistral or Llama 2 model to query the data. It doesn’t remember context, so follow-up questions can’t build on a previous question.
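The lack of memory can be pictured with a hypothetical Python sketch: a stateless chat builds each prompt from the current question alone, while a stateful variant replays earlier turns. Both classes are illustrative assumptions, not code from Chat with RTX; the retrieval step and the model's answers are omitted for brevity.

```python
from dataclasses import dataclass, field


class StatelessChat:
    # Hypothetical: each prompt contains only the current question, so nothing
    # from earlier turns is available to resolve words like "it".
    def prompt_for(self, question: str) -> str:
        return f"Question: {question}"


@dataclass
class StatefulChat:
    # Hypothetical contrast: earlier questions are replayed in every prompt,
    # which is what lets a follow-up refer back to a previous turn.
    history: list[str] = field(default_factory=list)

    def prompt_for(self, question: str) -> str:
        self.history.append(question)
        return "\n".join(f"Question: {q}" for q in self.history)


stateless = StatelessChat()
stateless.prompt_for("Who wrote the report?")
print(stateless.prompt_for("When was it published?"))  # "it" has no referent
```

In the stateless case the second prompt never mentions the report, so the model has no way to know what "it" refers to.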

According to The Verge’s testing, the installation takes about 30 minutes.

Installing the two language models, Mistral 7B and Llama 2, takes about an hour, and together they require 70GB.

Once it’s installed, a command prompt window launches with an active session, and users can enter queries through a browser-based interface.


🚨 BREAKING: Nvidia just released Chat with RTX, an AI chatbot that runs locally on your PC.

It can summarize or search documents across your PC’s files and even YouTube videos and playlists.

The chatbot runs locally, meaning results are fast, you can use it without the… pic.twitter.com/je3gzOs45I

— Rowan Cheung (@rowancheung) February 13, 2024
