IBL News | New York

OpenAI began rolling out GPT-4, its most advanced model, to API users through a waitlist.

The company described GPT-4 as “a large multimodal model (accepting image and text inputs, emitting text outputs) that, while less capable than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks.”

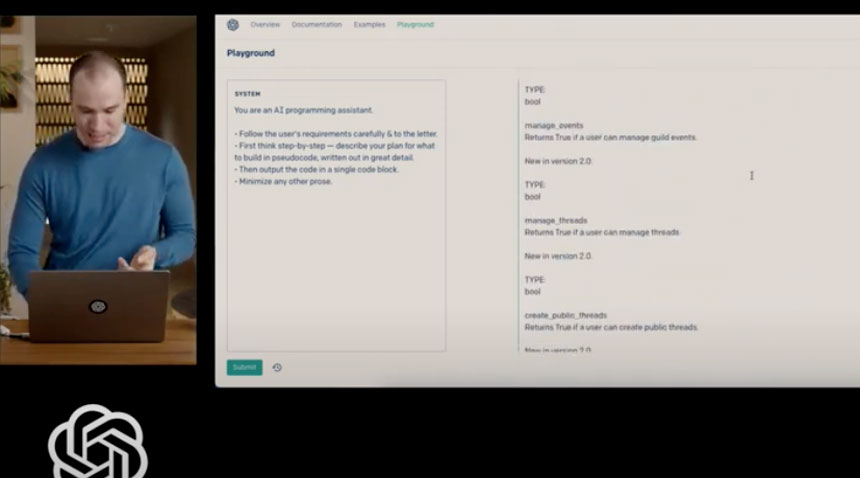

Greg Brockman, President and Co-Founder of OpenAI, showcased GPT-4 and some of its capabilities and limitations in a 24-minute live broadcast on YouTube (video below). In addition, a blog post detailed the model’s capabilities and limitations, including evaluation results.

“GPT-4 can solve difficult problems with greater accuracy, thanks to its broader general knowledge and advanced reasoning capabilities,” said the company.

“It can generate, edit, and iterate with users on creative and technical writing tasks, such as composing songs, writing screenplays, or learning a user’s writing style.”

OpenAI opened a waitlist for access to the GPT-4 API. It gave priority access to developers who contribute model evaluations to OpenAI Evals.

Paid users of ChatGPT Plus were offered GPT-4 access on chat.openai.com with a usage cap.

The announced API pricing was as follows:

• gpt-4 with an 8K context window (about 13 pages of text) will cost $0.03 per 1K prompt tokens, and $0.06 per 1K completion tokens.

• gpt-4-32k with a 32K context window (about 52 pages of text) will cost $0.06 per 1K prompt tokens, and $0.12 per 1K completion tokens.
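
To make the per-token rates concrete, here is a minimal Python sketch that estimates the cost of a single request from the prices listed above. The `PRICING` table and the `estimate_cost` helper are illustrative names invented for this example, not part of any OpenAI library, and the token counts are assumed to be known in advance.

```python
# Prices in USD per 1K tokens, copied from the list above.
# PRICING and estimate_cost are hypothetical names used only for illustration.
PRICING = {
    "gpt-4": {"prompt": 0.03, "completion": 0.06},
    "gpt-4-32k": {"prompt": 0.06, "completion": 0.12},
}


def estimate_cost(model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Return the estimated cost in USD of one request."""
    rates = PRICING[model]
    prompt_cost = (prompt_tokens / 1000) * rates["prompt"]
    completion_cost = (completion_tokens / 1000) * rates["completion"]
    return prompt_cost + completion_cost


if __name__ == "__main__":
    # Example: 1,500 prompt tokens and 500 completion tokens on each model.
    for model in PRICING:
        cost = estimate_cost(model, 1500, 500)
        print(f"{model}: ${cost:.3f}")
```

Under these rates, a request with 1,500 prompt tokens and 500 completion tokens would cost about $0.075 on gpt-4 and about $0.15 on gpt-4-32k, since the 32K model charges double for both prompt and completion tokens.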

– The New York Times: GPT-4 Is Exciting and Scary
