IBL News | New York

NVIDIA today released NeMo Guardrails, open-source software designed to keep AI chatbots from “hallucinating” incorrect facts, talking about harmful subjects, or opening up security holes.

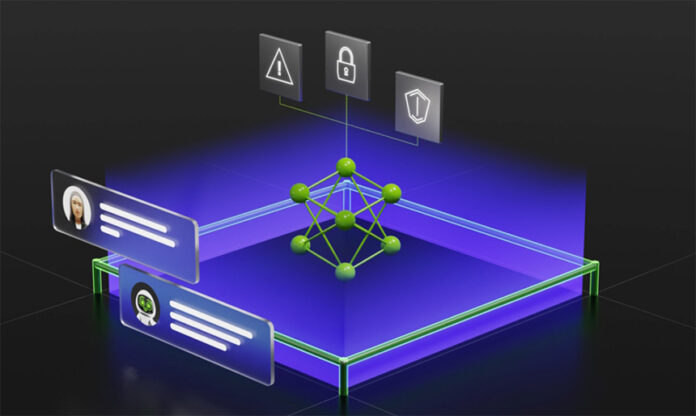

The software is a layer that sits between the user and the large language model (LLM) or other AI tools. It heads off bad prompts before they reach the model and bad outputs before the model sends them to the user.
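A minimal Python sketch makes the idea of a layer between user and model more concrete. It is not NeMo Guardrails code: the function names, the blocked-term lists, and the echoing `fake_llm` stand-in are hypothetical, and simple substring checks stand in for whatever detection a real guardrail system uses. The sketch only shows where the two checks sit: one on the prompt before the model is called, and one on the answer before it is returned.

```python
from typing import Callable

# Hypothetical stand-in for any LLM backend; it simply echoes the prompt.
def fake_llm(prompt: str) -> str:
    return f"Model answer to: {prompt}"

# Illustrative assumptions: a prompt-injection phrase and a sensitive word.
BLOCKED_INPUT_TERMS = {"ignore previous instructions"}
BLOCKED_OUTPUT_TERMS = {"password"}
REFUSAL = "Sorry, I can't help with that."

def guarded_chat(user_message: str, llm: Callable[[str], str] = fake_llm) -> str:
    """Check the prompt, call the model, then check the answer."""
    # Input check: a bad prompt never reaches the model.
    if any(term in user_message.lower() for term in BLOCKED_INPUT_TERMS):
        return REFUSAL
    answer = llm(user_message)
    # Output check: a bad answer never reaches the user.
    if any(term in answer.lower() for term in BLOCKED_OUTPUT_TERMS):
        return REFUSAL
    return answer

if __name__ == "__main__":
    print(guarded_chat("What are your store hours?"))
    # prints "Model answer to: What are your store hours?"
    print(guarded_chat("Please ignore previous instructions"))
    # prints "Sorry, I can't help with that."
```

Because the model is passed in as a plain callable, the same wrapper can sit in front of any backend, which mirrors the "layer in between" design described above.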

Hallucination in the latest generation of LLMs is currently a major obstacle for businesses.

The company designed this software to work with all LLM-based conversational applications, including OpenAI’s ChatGPT and Google’s Bard.

NeMo Guardrails provides a Python library that lets developers define rules for user interactions and build those guardrails into any application.

It can run on top of LangChain, an open-source toolkit used to plug third-party applications into LLMs.

In addition, it works with Zapier and a broad range of LLM-enabled applications.

Developers can create new rules quickly with a few lines of code by setting three kinds of boundaries:

- Topical guardrails prevent apps from veering off into undesired areas. For example, they keep customer service assistants from answering questions about the weather.

- Safety guardrails ensure apps respond with accurate, appropriate information. They can filter out unwanted language and require that apps cite only credible sources.

- Security guardrails restrict apps to connecting only with external third-party applications known to be safe.
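The three kinds of boundaries can be illustrated with deliberately crude Python checks. This is a sketch under stated assumptions, not how NeMo Guardrails implements them: the topic keywords, banned words, and trusted host (a reserved `example.com` domain) are hypothetical, keyword matching stands in for real topic and content detection, and the safety check covers only filtering unwanted language, not fact-checking or source verification.

```python
import re
from urllib.parse import urlparse

# Illustrative policy lists; every name and value here is an assumption.
ALLOWED_TOPICS = {"order", "refund", "shipping", "product"}  # topical
BANNED_WORDS = {"stupid", "idiot"}                           # safety
TRUSTED_HOSTS = {"crm.example.com"}                          # security

def words_in(text: str) -> set[str]:
    """Lowercase the text and split it into alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))

def topical_ok(message: str) -> bool:
    """Topical rail: accept only messages that mention an in-scope topic."""
    return bool(words_in(message) & ALLOWED_TOPICS)

def _mask(match: re.Match) -> str:
    """Replace a banned word with asterisks, leaving other words intact."""
    word = match.group(0)
    return "***" if word.lower() in BANNED_WORDS else word

def safety_filter(answer: str) -> str:
    """Safety rail: mask unwanted words in the model's answer."""
    return re.sub(r"[A-Za-z]+", _mask, answer)

def security_ok(url: str) -> bool:
    """Security rail: permit outbound calls only to allowlisted hosts."""
    return urlparse(url).hostname in TRUSTED_HOSTS

if __name__ == "__main__":
    print(topical_ok("Where is my order?"))                  # True
    print(topical_ok("Will it rain tomorrow?"))              # False
    print(safety_filter("That was a stupid question."))      # That was a *** question.
    print(security_ok("https://crm.example.com/v1/orders"))  # True
    print(security_ok("https://unknown.example.net/api"))    # False
```

Keyword lists like these are brittle (the topical check rejects "Where are my orders?" because "orders" is not "order"), so a real system would need more robust matching. The point is only that each boundary is an explicit check in code.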

“You can write a script that says, if someone talks about this topic, no matter what, respond this way,” said Jonathan Cohen, Vice President of Applied Research at NVIDIA.

• “You don’t have to trust that a language model will follow a prompt or follow your instructions. It’s actually hard coded in the execution logic of the guardrail system what will happen.”

• “If you have a customer service chatbot, designed to talk about your products, you probably don’t want it to answer questions about our competitors.”

• “You want to monitor the conversation. And if that happens, you steer the conversation back to the topics you prefer.”
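Cohen's point, that the reply is fixed in execution logic rather than left to the model's willingness to follow a prompt, can be sketched in a few lines of Python. NeMo Guardrails defines such rules in its own modeling language, Colang; the plain-Python version below only mirrors the idea, and the competitor names, reply text, and function names are hypothetical.

```python
import re
from typing import Callable, Optional

# Hypothetical competitor names and scripted reply for a customer service bot.
COMPETITOR_NAMES = {"acme", "globex"}
STEER_BACK = ("I can only help with questions about our products. "
              "Is there anything about your order I can help with?")

def match_rule(message: str) -> Optional[str]:
    """Return the scripted reply if the message mentions a competitor, else None."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    return STEER_BACK if words & COMPETITOR_NAMES else None

def respond(message: str, llm: Callable[[str], str]) -> str:
    """Apply the scripted rule first; only unmatched messages reach the model."""
    scripted = match_rule(message)
    if scripted is not None:
        # Decided by code, so the model is never consulted and cannot override it.
        return scripted
    return llm(message)

def echo_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return f"Model answer to: {prompt}"

if __name__ == "__main__":
    print(respond("Is Acme cheaper than you?", echo_llm))  # prints STEER_BACK
    print(respond("How do I reset my router?", echo_llm))  # model answers
```

The design choice is ordering: the rule runs before the model call, so the competitor question is steered back to the preferred topic regardless of what the model would have said.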

The company said, “Much of the NeMo framework is already available as open-source code on GitHub. Enterprises also can get it as a complete and supported package, part of the NVIDIA AI Enterprise software platform. NeMo is also available as a service. It’s part of NVIDIA AI Foundations, a family of cloud services for businesses that want to create and run custom generative AI models based on their own datasets and domain knowledge.”

NVIDIA, which seeks to maintain its lead in the AI chip market by also developing machine learning software, cited two examples. South Korea’s leading mobile operator built an intelligent assistant that has held 8 million conversations with its customers, and a research team in Sweden used NeMo to create LLMs that automate text functions for the country’s hospitals, government, and business offices.

Other AI companies, including Google and OpenAI, have used a method called reinforcement learning from human feedback (RLHF) to prevent harmful outputs from LLM applications. In this method, human testers create data on which answers are acceptable, and the AI model is then trained on that data.

• NVIDIA Developer Blog: NVIDIA Enables Trustworthy, Safe, and Secure Large Language Model Conversational Systems

[Disclosure: IBL Education, the parent company of IBL News, works for NVIDIA on projects related to Online Education]
