IBL News | New York

OpenAI announced yesterday that it has begun rolling out new voice conversation and image capabilities in ChatGPT.

ChatGPT Plus and Enterprise subscribers will get these features over the next two weeks.

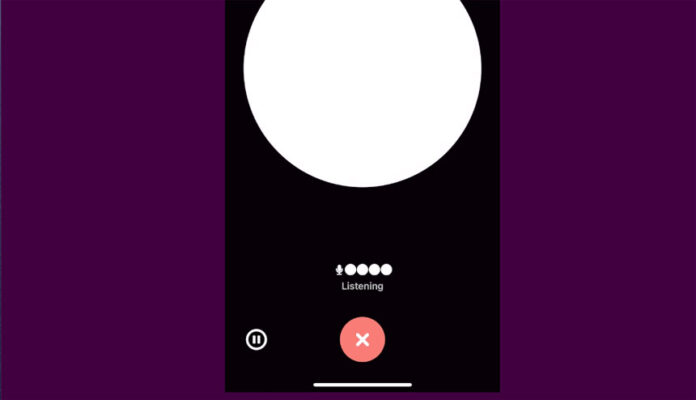

ChatGPT now includes a new interface for snapping images and holding live voice conversations.

The company provided this example: When you’re home, snap pictures of your fridge and pantry to figure out what’s for dinner (and ask follow-up questions for a step-by-step recipe). After dinner, help your child with a math problem by taking a photo, circling the problem set, and having it share hints with both of you.

Voice is coming to iOS and Android (opt in through your settings), and images will be available on all platforms.

To get started with voice, users go to Settings → New Features in the mobile app and opt in to voice conversations.

The voice capability is powered by a new text-to-speech model that can generate human-like audio from just text and a few seconds of sample speech.

OpenAI collaborated with professional voice actors to create each of the voices.

The company also uses Whisper, its open-source speech recognition system, to transcribe your spoken words into text.

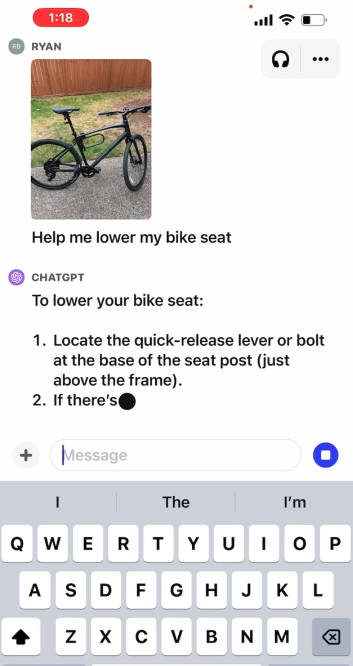

Image understanding in ChatGPT is powered by multimodal GPT-3.5 and GPT-4. These models apply their language reasoning skills to a wide range of images, such as photographs, screenshots, and documents containing both text and images.

OpenAI offered these examples: “Troubleshoot why your grill won’t start, explore the contents of your fridge to plan a meal or analyze a complex graph for work-related data. To focus on a specific part of the image, you can use the drawing tool in our mobile app.”

OpenAI also said it is already collaborating with Spotify to pilot Spotify’s Voice Translation feature, which helps podcasters translate their podcasts into additional languages in their own voices.

Regarding these advancements, OpenAI said that its “goal is to build AGI that is safe and beneficial. We believe in making our tools available gradually, which allows us to make improvements and refine risk mitigations over time while also preparing everyone for more powerful systems in the future. This strategy becomes even more important with advanced models involving voice and vision.”