IBL News | New York

Researchers from Stanford University, MIT, and Princeton used a newly created scoring system to rank ten major AI models on how openly they operate.

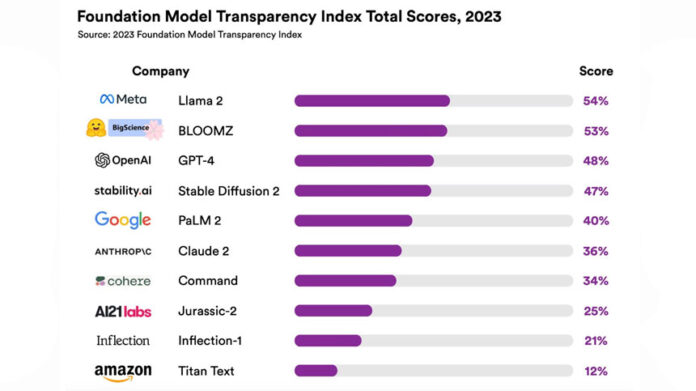

The index includes popular models such as OpenAI’s GPT-4 (which powers the paid version of ChatGPT), Google’s PaLM 2 (which powers Bard), and Meta’s Llama 2. It also covers lesser-known models such as Amazon’s Titan Text and Inflection AI’s Inflection-1, the model that powers the Pi chatbot.

Three of the developers on the list (Meta, Hugging Face, and Stability AI) release open foundation models (Llama 2, BLOOMZ, and Stable Diffusion 2, respectively), while the other seven built closed foundation models accessible via an API.

Llama 2 led at 54%, GPT-4 placed third at 48%, and PaLM 2 took fifth at 40%. See the ranking in the table above.

The Stanford ‘Foundation Model Transparency Index’ paper evaluated 100 indicators. These cover technical aspects (data, compute, and details about the model training process) as well as the social aspects of building foundation models (their impact on labor and the environment, and the usage policies governing real-world use).

“The indicators are based on, and synthesize, past interventions aimed at improving the transparency of AI systems, such as model cards, datasheets, evaluation practices, and how foundation models intermediate a broader supply chain,” explained one of the authors in a blog post.

“Transparency is poor on matters related to how models are built. In particular, developers are opaque on what data is used to train their model, who provides that data and how much they are paid, and how much computation is used to train the model.”

The researchers also released all of their analysis in a GitHub repository.

The New York Times reported that several AI companies have already been sued by authors, artists, and media companies who accuse them of illegally using copyrighted works to train their models.

So far, most of the lawsuits have targeted open-source AI projects or projects that disclosed detailed information about their models.
