IBL News | New York

OpenAI this week announced a partnership with San Francisco–based startup Scale to offer enterprise-grade fine-tuning capabilities.

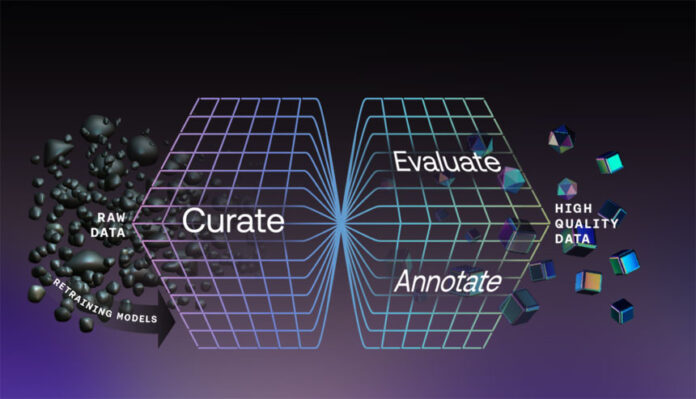

Fine-tuning lets companies customize large language models (LLMs) on their proprietary data to improve performance on their own tasks. The process requires rigorous data enrichment and model evaluation.

OpenAI recently launched fine-tuning for GPT-3.5 Turbo and will bring fine-tuning to GPT-4 this fall.

Brex, a financial services company that has been using GPT-4 for memo generation, will be a pilot customer for fine-tuned GPT-3.5. The company wants to explore whether a fine-tuned GPT-3.5 model can reduce cost and latency while maintaining quality.

Scale explains that it first prepares and enhances enterprise data with its Scale Data Engine. It then fine-tunes GPT-3.5 on this data and further customizes the models with plugins and retrieval-augmented generation (RAG), which lets a model reference and cite a company's proprietary documents in its responses. Finally, Scale uses its Test and Evaluation platform and trained domain experts to check that the models meet performance targets and safety requirements.

“[Scale AI’s] software package Nucleus enables firms to quickly identify and fix mislabeled data, or refine existing data labels to improve algorithmic training and boost an AI system’s performance,” said the company’s founder and CEO, 24-year-old billionaire Alexandr Wang.
