IBL News | New York

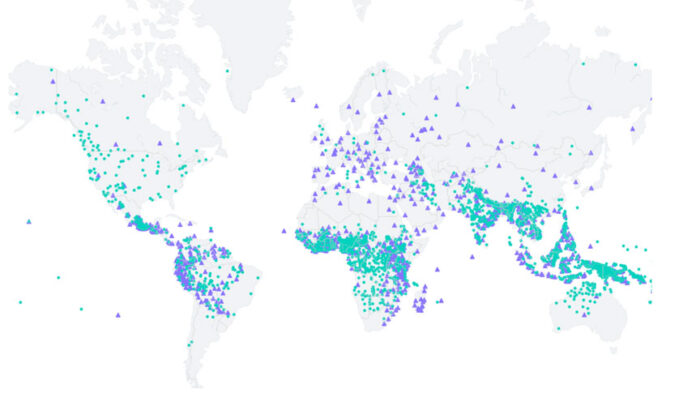

Meta released this week as open-source software an AI model called Massively Multilingual Speech (MMS) that can recognize over 4,000 spoken languages and produces text-to-speech in over 1,100 languages.

Today, existing speech recognition models only cover approximately 100 languages — a fraction of the 7,000+ known languages spoken on the planet.

Machines with the ability to recognize and produce speech can make information accessible to many more people, including those who rely entirely on voice to access information.

Speech recognition and text-to-speech models typically require training on thousands of hours of audio with accompanying transcription labels.

“Through this work, we hope to make a small contribution to preserve the incredible language diversity of the world,” said Meta.

Meta combined wav2vec 2.0, its self-supervised learning, and a new dataset that provides labeled data for over 1,100 languages and unlabeled data for nearly 4,000 languages.

To collect audio data for thousands of languages, Meta turned to religious texts, such as the Bible, that have been translated into many different languages and whose translations have been widely studied for text-based language translation research.

These translations have publicly available audio recordings of people reading these texts in different languages. As part of this project, Meta created a dataset of readings of the New Testament in over 1,100 languages, which provided, on average, 32 hours of data per language.

By considering unlabeled recordings of various other Christian religious readings, Meta increased the number of languages available to over 4,000.

“We also envision a future where a single model can solve several speech tasks for all languages. While we trained separate models for speech recognition, speech synthesis, and language identification, we believe that in the future, a single model will be able to accomplish all these tasks and more, leading to better overall performance,” Meta said.

En Español

En Español