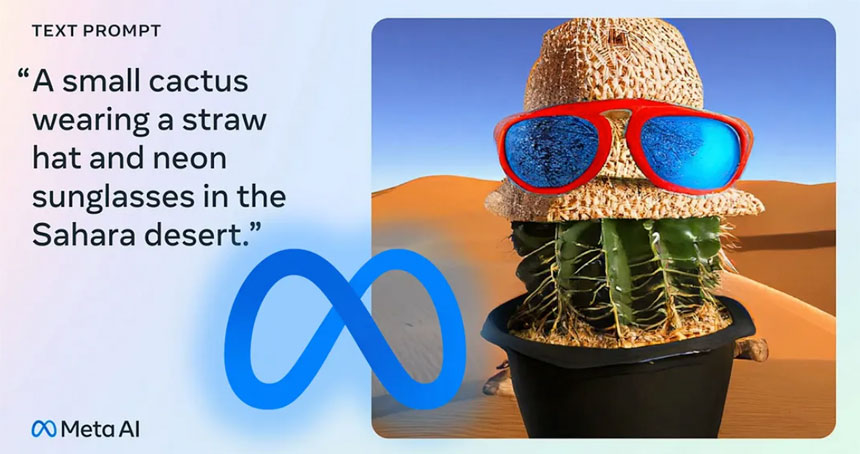

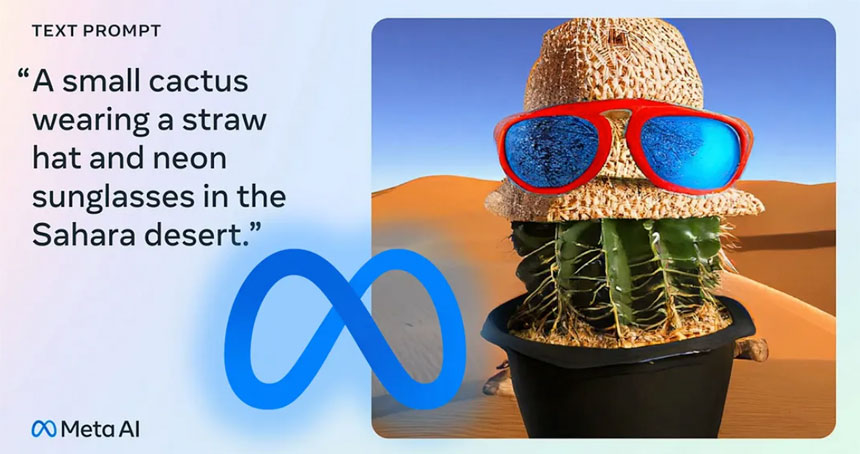

Meta Announced CM3leon, an Advanced AI Model for Text and Images

July 21, 2023

Education technology today is marked by rising AI adoption among educators and innovative personalized learning approaches.

AI is transforming the education technology landscape as more teachers adopt intelligent tools, driving more personalized and adaptive learning experiences.

The initiative aims to support science, technology, engineering, and mathematics education.

Leaders from around the world discuss the future of remote and hybrid learning models.

Research indicates significant long-term academic and social advantages for students.