IBL News | New York

Hallucination, which occurs when an AI model makes things up, is the number one roadblock to adopting LLMs (Large Language Models) in business, according to companies such as Anthropic, LangChain, Elastic, Dropbox, and others.

Reducing and measuring hallucination, optimizing context length and construction, incorporating other data modalities, developing GPU alternatives, and making agents usable are among the top ten open challenges and major research directions for LLMs today, expert Chip Huyen wrote in an insightful article. Many startups are also focusing on these problems.

1. Reduce and measure hallucinations

2. Optimize context length and context construction

3. Incorporate other data modalities

4. Make LLMs faster and cheaper

5. Design a new model architecture

6. Develop GPU alternatives

7. Make agents usable

8. Improve learning from human preference

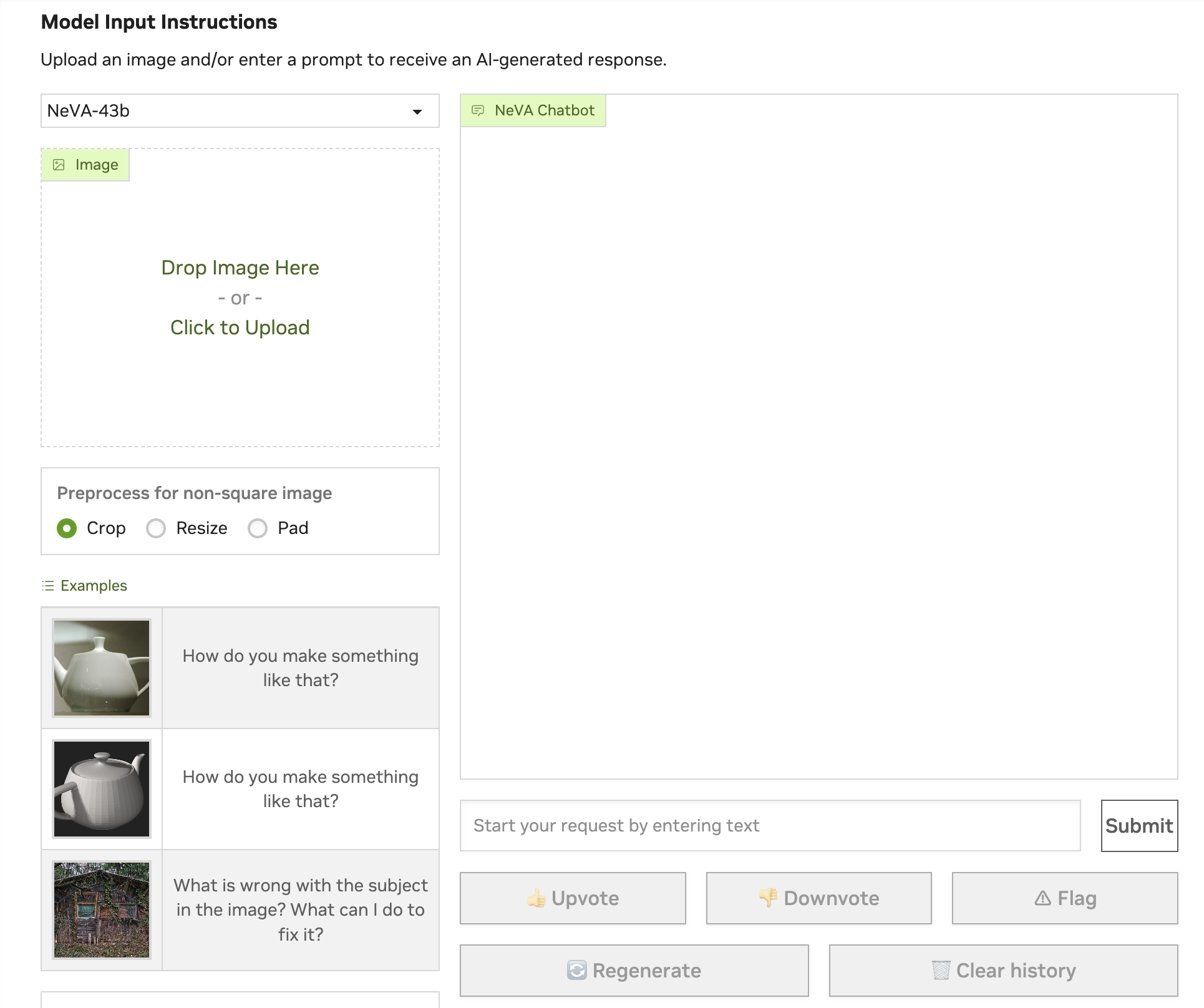

9. Improve the efficiency of the chat interface

10. Build LLMs for non-English languages

Some interesting ideas mentioned in the article:

• Ad-hoc tips to reduce hallucination include adding more context to the prompt, using chain-of-thought prompting or self-consistency, and asking the model to be concise in its response.

• Context length and context construction are critical for RAG (Retrieval Augmented Generation), which has emerged as a predominant pattern for LLM use cases in industry.

• Multimodality promises a big boost in model performance.

• Developing a new architecture that outperforms the Transformer isn't easy, as the Transformer has been heavily optimized over the last six years.

• Developing GPU alternatives, such as quantum computers, continues to attract hundreds of millions of dollars.

• Despite the excitement around Auto-GPT and GPT-Engineering, there is still doubt about whether LLMs are reliable and performant enough to be entrusted with the power to act.

• The most notable startup in this area is perhaps Adept, which has raised almost half a billion dollars to date.

• On learning from human preference, DeepMind has tried generating responses that please the largest number of people.

• The chat interface can still be made more efficient in several ways. Nvidia's NeVA chatbot is one of the most frequently mentioned examples.

• Current English-first LLMs don't work well for many other languages in terms of performance, latency, and speed. Building LLMs for non-English languages opens a new frontier. Symato might be the biggest community effort in this area today.
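To make the self-consistency tip mentioned among the hallucination fixes more concrete, here is a minimal Python sketch, not taken from the article. It assumes a hypothetical `generate` function that calls an LLM with sampling enabled (so repeated calls can differ) and returns only the final answer; in practice you would extract that answer from each chain-of-thought response. The idea is to ask the same question several times and keep the answer the model gives most often.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, Iterable, List


def self_consistent_answer(
    generate: Callable[[str], str],
    prompt: str,
    num_samples: int = 5,
) -> str:
    """Sample several answers to the same prompt and return the most common one.

    `generate` is a hypothetical stand-in for an LLM call that samples with
    temperature > 0 and returns just the final answer as a string.
    """
    if num_samples < 1:
        raise ValueError("num_samples must be at least 1")
    answers: List[str] = [generate(prompt).strip() for _ in range(num_samples)]
    # Majority vote. Counter.most_common(1) returns [(answer, count)];
    # on a tie, the answer encountered first wins.
    best_answer, _count = Counter(answers).most_common(1)[0]
    return best_answer


def make_fake_model(outputs: Iterable[str]) -> Callable[[str], str]:
    """Hypothetical stand-in model that cycles through canned answers."""
    answer_stream = cycle(outputs)
    return lambda prompt: next(answer_stream)


if __name__ == "__main__":
    model = make_fake_model(["42", "41", "42", "42", "43"])
    print(self_consistent_answer(model, "What is 6 * 7?", num_samples=5))  # prints 42
```

A single sampled answer may be a one-off error; voting across samples favors answers the model produces consistently. The trade-off is cost, since each question now takes `num_samples` model calls.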
