IBL News | New York

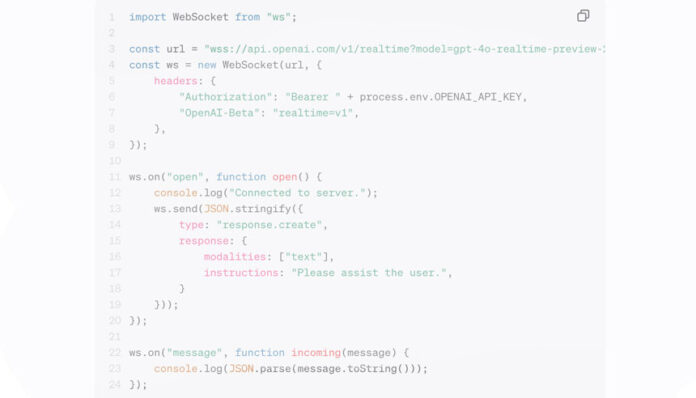

OpenAI announced several tools this week, including a public beta of its “Realtime API” for building near-real-time, multimodal conversational apps with AI-generated voice responses.

It currently supports text and audio as input and output, as well as function calling.
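For readers curious what this looks like in practice, here is a minimal Python sketch. It assumes the message format described in OpenAI's Realtime API beta documentation at launch: a client opens a WebSocket to `wss://api.openai.com/v1/realtime` (sending `Authorization: Bearer <key>` and `OpenAI-Beta: realtime=v1` headers) and exchanges JSON events. The sketch only builds those events and does not open a connection. The `get_weather` function is hypothetical, and field names should be checked against OpenAI's current documentation.

```python
import json

# Endpoint and model name as published for the beta (Oct 2024); an assumption to verify.
REALTIME_URL = "wss://api.openai.com/v1/realtime?model=gpt-4o-realtime-preview-2024-10-01"


def session_update_event(voice: str = "alloy") -> str:
    """Build a session.update event enabling text + audio output and one tool."""
    event = {
        "type": "session.update",
        "session": {
            "modalities": ["text", "audio"],  # respond with both text and audio
            "voice": voice,                   # must be one of the preset voices
            "tools": [
                {
                    # Hypothetical function, for illustration only.
                    "type": "function",
                    "name": "get_weather",
                    "description": "Look up the current weather for a city.",
                    "parameters": {
                        "type": "object",
                        "properties": {"city": {"type": "string"}},
                        "required": ["city"],
                    },
                }
            ],
            "tool_choice": "auto",  # let the model decide when to call the tool
        },
    }
    return json.dumps(event)


def user_text_event(text: str) -> str:
    """Build a conversation.item.create event carrying a user text message."""
    return json.dumps({
        "type": "conversation.item.create",
        "item": {
            "type": "message",
            "role": "user",
            "content": [{"type": "input_text", "text": text}],
        },
    })


def response_create_event() -> str:
    """Ask the model to generate a response to the conversation so far."""
    return json.dumps({"type": "response.create"})


if __name__ == "__main__":
    # These strings would be sent, in order, over the WebSocket connection.
    for message in (
        session_update_event(),
        user_text_event("What's the weather in New York?"),
        response_create_event(),
    ):
        print(message)
```

If the model decides to call `get_weather`, the server replies with events describing the function call; the app runs the function itself and sends the result back as another conversation item.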

The low-latency voice responses are limited to six preset voices rather than third-party voices, a restriction intended to prevent copyright issues.

The announcement, made at OpenAI’s 2024 DevDay, is part of the company’s effort to convince developers to build tools with its AI models.

The San Francisco-based research lab said that more than 3 million developers are building with its AI models.

OpenAI Chief Product Officer Kevin Weil said the recent departures of CTO Mira Murati and Chief Research Officer Bob McGrew would not slow innovation.

As part of its DevDay announcements, OpenAI also introduced vision fine-tuning, which lets developers improve GPT-4o’s performance on tasks involving visual understanding.

OpenAI also said it would not release any new AI models at this year’s DevDay. As TechCrunch reported, the video generation model Sora will have to wait a little longer.
