IBL News | New York

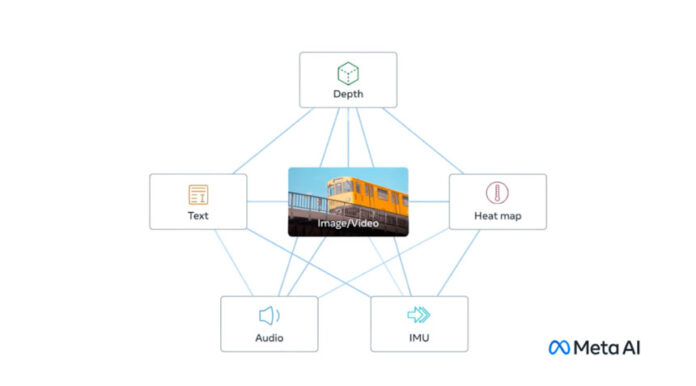

Meta/Facebook announced a new open-source multisensory model that links together six types of data (text, audio, visual data, depth, thermal infrared images, and movement readings), pointing to a future of generative AI that creates immersive experiences by cross-referencing this information.

The model is a research project, released alongside a paper, with no immediate consumer or practical applications. According to experts, the open release reflects well on Meta/Facebook, since OpenAI and Google are developing comparable models in secret.

Linking types of data is already common in generative AI. Image generators like DALL-E, Stable Diffusion, and Midjourney all rely on systems that link text and images during the training stage, which is what lets them turn users’ text inputs into pictures. Many AI tools generate video or audio in the same way.

Meta’s underlying model, named ImageBind, is the first one to combine six types of data into a single embedding space.
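
To illustrate what a single embedding space makes possible, here is a minimal sketch in Python. It is not Meta's code and does not use the ImageBind library: the file names, the three-dimensional vectors, and the `nearest` helper are all made up for illustration. The idea it shows is that once every kind of data is mapped to vectors in the same space, items from different modalities can be compared directly, for example by cosine similarity.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical, hand-made vectors standing in for real model outputs.
# Keys are (modality, item name); a real model would produce
# much higher-dimensional embeddings.
EMBEDDINGS = {
    ("image", "beach_sunset.jpg"): [0.9, 0.1, 0.0],
    ("audio", "ocean_waves.wav"): [0.8, 0.2, 0.1],
    ("audio", "car_engine.wav"): [0.0, 0.1, 0.9],
    ("text", "a busy street"): [0.1, 0.2, 0.9],
}

def nearest(query_key, target_modality):
    """Return the item of target_modality closest to the query item."""
    query = EMBEDDINGS[query_key]
    candidates = [
        (key, cosine_similarity(query, vec))
        for key, vec in EMBEDDINGS.items()
        if key[0] == target_modality and key != query_key
    ]
    best_key, _ = max(candidates, key=lambda pair: pair[1])
    return best_key

# An image query retrieves audio, and a text query retrieves audio,
# because all items live in the same (toy) space.
print(nearest(("image", "beach_sunset.jpg"), "audio"))
print(nearest(("text", "a busy street"), "audio"))
```

In this toy setup the sunset image lands closest to the ocean recording and the street description closest to the engine recording, which is the kind of cross-modal matching described in Meta's examples.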

Recently, Meta open-sourced LLaMA, the language model that started an alternative movement to OpenAI and Google. With ImageBind, it’s continuing with this strategy by opening the floodgates for researchers to try to develop new, holistic AI systems.

• “When humans absorb information from the world, we innately use multiple senses, such as seeing a busy street and hearing the sounds of car engines. Today, we’re introducing an approach that brings machines one step closer to humans’ ability to learn simultaneously, holistically, and directly from many different forms of information — without the need for explicit supervision (the process of organizing and labeling raw data),” said Meta in a blog post.

• “For instance, while Make-A-Scene can generate images by using text prompts, ImageBind could upgrade it to generate images using audio sounds, such as laughter or rain.”

• “Imagine that someone could take a video recording of an ocean sunset and instantly add the perfect audio clip to enhance it, or when a model like Make-A-Video produces a video of a carnival, ImageBind can suggest background noise to accompany it, creating an immersive experience.”

• “There’s still a lot to uncover about multimodal learning. We hope the research community will explore ImageBind and our accompanying published paper to find new ways to evaluate vision models and lead to novel applications.”
