IBL News | New York

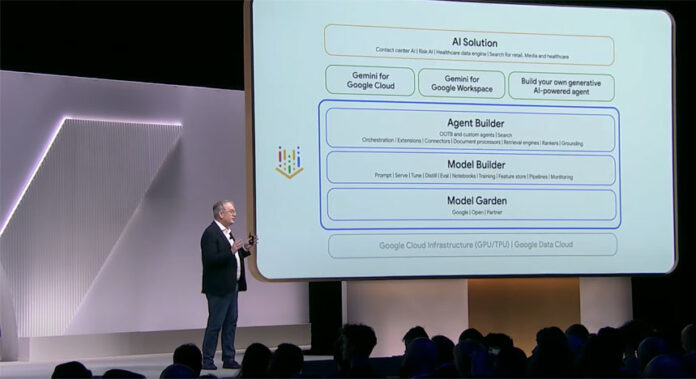

Earlier this month, at its Cloud Next 2024 event, Google launched Vertex AI Agent Builder, a no-code console that lets developers create production-grade AI agents using natural language.

It uses open-source frameworks like LangChain on Vertex AI. Developers create agents by defining the goal, providing step-by-step instructions, and sharing conversational examples.

They can stitch together multiple agents, with one agent functioning as the main agent and others as subagents for complex goals. Agents can call functions or connect to applications to perform tasks for the user.
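To make the main-agent and subagent arrangement concrete, here is a minimal sketch in plain Python. It does not use the Vertex AI Agent Builder API. The `Agent` and `MainAgent` classes, the keyword routing, and the `refund` and `track` functions are all hypothetical stand-ins, and a real system would presumably let a model choose the subagent and the function to call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Agent:
    """Toy agent (hypothetical): a goal plus named functions it may call."""
    name: str
    goal: str
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def run(self, request: str) -> str:
        # Call the first tool whose name appears in the request text.
        for tool_name, tool in self.tools.items():
            if tool_name in request.lower():
                return tool(request)
        return f"{self.name}: no matching tool for '{request}'"


@dataclass
class MainAgent:
    """Toy main agent: routes by keyword (an assumption; not how a model routes)."""
    subagents: Dict[str, Agent]

    def run(self, request: str) -> str:
        for keyword, agent in self.subagents.items():
            if keyword in request.lower():
                return agent.run(request)
        return "main: no subagent can handle this request"


def refund(request: str) -> str:
    # Stand-in for a call into a billing application.
    return "refund issued"


def track(request: str) -> str:
    # Stand-in for a call into a shipping application.
    return "order is in transit"


billing = Agent("billing", "resolve payment issues", {"refund": refund})
shipping = Agent("shipping", "answer delivery questions", {"track": track})
main = MainAgent({"refund": billing, "track": shipping})

print(main.run("Please refund my last order"))
print(main.run("Can you track my package?"))
```

Each subagent owns a narrow goal and its own functions, so the main agent only has to decide where a request belongs.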

Vertex AI Agent Builder can improve accuracy and user experience by grounding model outputs in Google Search results, or by using vector search to build custom embeddings-based RAG (retrieval-augmented generation) systems.
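The idea behind embeddings-based grounding can be sketched in a few lines of plain Python. This is an illustration under loud assumptions, not Google's implementation: `embed` is a toy word-count embedding standing in for a learned embedding model, the linear scan in `retrieve` stands in for a vector search index, and `DOCS` and `grounded_prompt` are hypothetical.

```python
import math
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding (assumption): word counts instead of a learned model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query (linear scan)."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def grounded_prompt(query: str, docs: List[str]) -> str:
    """Build a prompt that restricts the model to the retrieved context."""
    context = "\n".join(retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base for illustration only.
DOCS = [
    "refunds are processed within five business days",
    "standard shipping takes three to seven days",
    "support is available by chat on weekdays",
]

print(grounded_prompt("how long do refunds take", DOCS))
```

The retrieved passage is placed in the prompt ahead of the question, which is what ties the model's answer to a known source rather than to its training data alone.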

“Vertex AI Agent Builder allows people to very easily and quickly build conversational agents,” said Google Cloud CEO Thomas Kurian [video].

During the same event, Google also announced Gemini 1.5 Pro, which can process up to 1 million tokens, around four times the amount of data that Anthropic’s Claude 3 model can handle and eight times as much as OpenAI’s GPT-4 Turbo.

This allows tasks like analyzing code libraries, reasoning across lengthy documents, and holding long conversations.

Google has made Gemini 1.5 Pro available in a public preview via the Gemini API in Google AI Studio.

Meanwhile, Google’s Vertex AI Model Garden is expanding the variety of available open-source models, currently providing developers with over 130 curated models.


The next big breakthrough in AI is AI Agents. This is when AI goes from being used as an assistant to chat with, to using AI to accomplish complete tasks that a human might otherwise have to perform. This moves AI from being a "read-only" operation to fundamentally a "read/write"…

— Aaron Levie (@levie) April 21, 2024

