TOP NEWS

Runway Released a Video Model that Outperformed Veo 3 and Sora 2 Pro on a Benchmark

Google Launches "Workspace Studio", a New AI Productivity Tool for Business

Harvey, With $100M in ARR, Doubles Its Valuation to $8B

MIT Says that AI Will Reshape the Labor Market and Could Replace 11.7% of the U.S. Workforce

Anthropic Introduces Its Latest Model, 'Claude Opus 4.5'

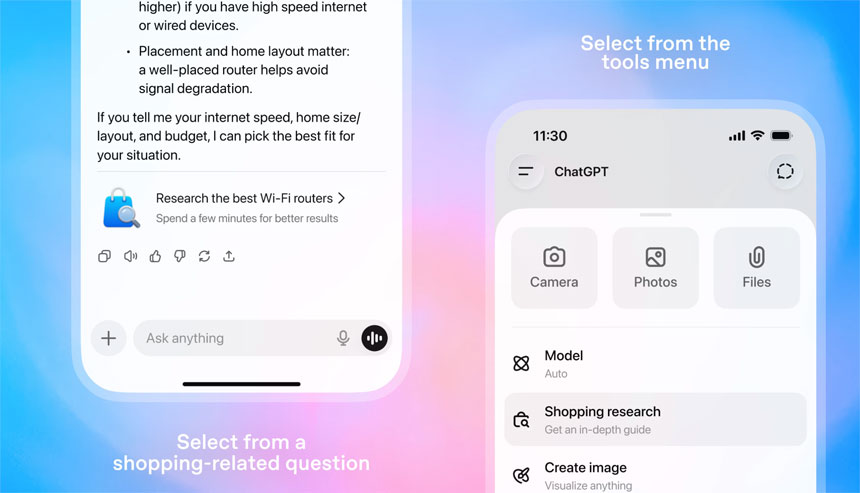

ChatGPT Rolls Out a Shopping Search Feature that Offers Personalized Buyer Guides

China Prioritizes an Economy Based on AI and Robot-Driven Factories

A Two-Track Economy: Only Infrastructure and Chips Are Booming

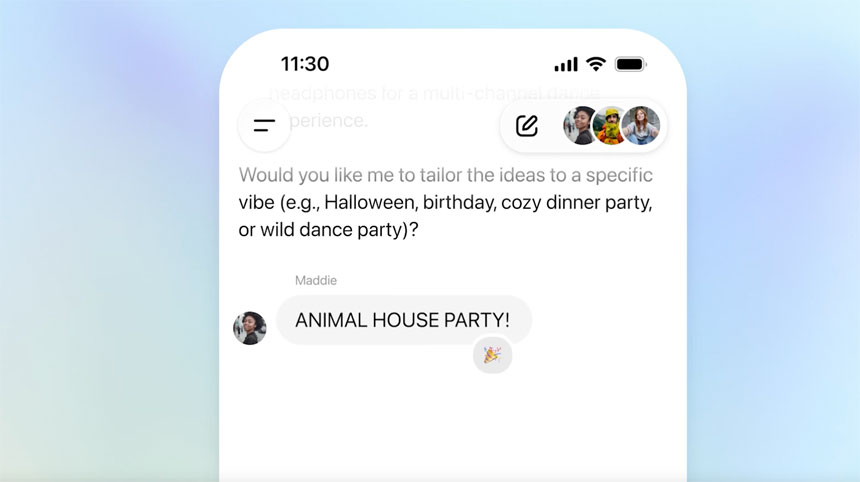

OpenAI Unveils Group Chats to Bring People Into the Same Conversation

Genspark, a Startup that Offers AI Workplace Agents, Reaches a Unicorn Valuation

AI LITERACY

ibl.ai

Today's Summary

Monday, December 8, 2025

Education technology today is marked by rising AI adoption among educators and innovative personalized learning approaches.

Today in AI & EdTech

AI is transforming the education technology landscape as more teachers adopt intelligent tools, driving more adaptive learning experiences.

Today in Education

U.S. Department of Education Announces New Funding for STEM Programs

The initiative aims to support science, technology, engineering, and mathematics education.

Global Education Summit Highlights Digital Learning Innovations

Leaders from around the world discuss the future of remote and hybrid learning models.

New Study Shows Benefits of Early Childhood Education

Research indicates significant long-term academic and social advantages for students.